Community Moderating — Bringing Our Best

Dispelling some common myths about moderation, and presenting our own moderation approach for Ethereum World.

In light of the Trump ban, far right hate speech, and the plainly weird QAnon conspiracy theories, the world's attention is increasingly focused on the moderation of and by social media platforms.

Our work at AKASHA is founded on the belief that humans are not problems waiting to be solved, but potential waiting to unfold. We are dedicated to that unfolding, and so to enabling, nurturing, exploring, learning, discussing, self-organizing, creating, and regenerating. This post explores our thinking and doing when it comes to moderating.

Moderating processes are fascinating and essential. They must encourage and accommodate the complexity of community, and their design can contribute to phenomenal success or dismal failure. And regardless, we're never going to go straight from zero to hero here. We need to work this up together.

We're going to start by defining some common terms and dispelling some common myths. Then we explore some key design considerations and sketch out the feedback mechanisms involved, before presenting the moderating goals as we see them right now. Any and all comments and feedback are most welcome.

We will emphasise one thing about our Ethereum World journey — it makes no sense whatsoever for the AKASHA team to dictate the rules of the road, as we hope will become increasingly obvious in the weeks and months ahead.

Terms and myths

Let's do this.

Terms

"The beginning of wisdom is the definition of terms." An apposite truism attributed to Socrates.

Governing — determining authority, decision-making, and accountability in the process of organizing [ref].

Moderating — the subset of governing that structures participation in a community to facilitate cooperation and prevent abuse [ref].

Censoring — prohibiting or suppressing information considered to be politically unacceptable, obscene, or a threat to security [Oxford English dictionary].

Myth 1: moderation is censorship

One person's moderating is another person's censoring, as this discussion among Reddit editors testifies. And while it's been found that the centralized moderating undertaken by the likes of Facebook, Twitter, and YouTube constitutes “a detailed system rooted in the American legal system with regularly revised rules, trained human decision-making, and reliance on a system of external influence”, it is clear “they have little direct accountability to their users” [ref].

That last bit doesn't sit well with us, and if you're reading this then it very likely doesn't float your boat either. We haven't had to rely on private corporations taking this role throughout history, and we have no intention of relying on them going forward.

Subjectively, moderation may feel like censorship. This could be when the moderator really has gone ‘too far’, or when the subject doesn't feel sufficiently empowered to defend herself, but also when the subject is indeed just an asshole.

As you will imagine, AKASHA is not pro-censorship. Rather, we recognise that the corollary of freedom of speech is freedom of attention. Just because I'm writing something does not mean you have to read it. Just because I keep writing stuff doesn't mean you have to keep seeing that I keep writing stuff. This is a really important observation.

Myth 2: moderation is unnecessary

AKASHA is driven to help create the conditions for the emergence of collective minds, i.e. intelligences greater than the sum of their parts. Anyone drawn to AKASHA, and indeed to Ethereum, is interested in helping to achieve something bigger than themselves, and we haven't found an online ‘free-for-all’ that leads to such an outcome.

Large-scale social networks without appropriate moderating actions are either designed to host extremists, or attract extremists because the host has given up trying to design for moderating. A community without moderating processes is missing essential structure, leaving it little more than a degenerative mess that many would avoid.

Myth 3: moderation is done by moderators

Many social networks and discussion fora include a role often referred to as moderator, but every member of every community has some moderating capabilities. This may be explicit — e.g. flagging content for review by a moderator — or implicit — e.g. heading off a flame war with calming words.

If a community member is active, she is moderating. In other words, she is helping to maintain and evolve the social norms governing participation. As a general rule of thumb, the more we can empower participants to offer appropriate positive and negative feedback, the more appropriately we can divine an aggregate consequence, and the more shoulders take up the essential moderating effort. We'll know we've got there when the role we call moderator seems irrelevant.

Myth 4: moderation is simple enough

Moderating actions may be simple enough, but overall moderating design is as much art as science. It's top-down, bottom-up, and side-to-side, and complex …

Complexity refers to the phenomenon whereby a system can exhibit characteristics that can't be traced to one or two individual participants. Complex systems contain a collection of many interacting objects. They involve the effect of feedback on behaviors, system openness, and the complicated mixing of order and chaos [ref]. Many interacting people constitute a complex system, so there's no getting around this in the context of Ethereum World.

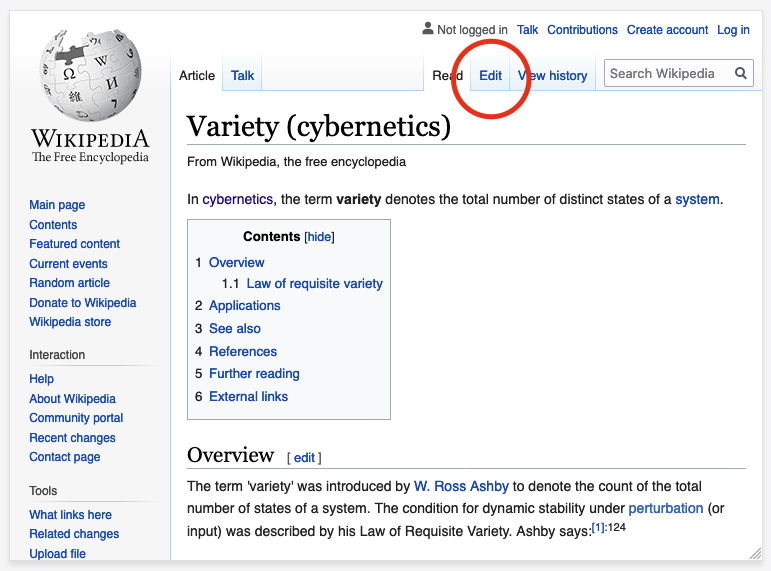

The law of requisite variety asserts that a system's control mechanism (i.e. the governing, specifically the moderating in the context here) must be capable of exhibiting at least as many states as the system itself [ref]. Failure to engineer for this sets the system up to fail. Here are some example failure modes in this respect:

- A team of central moderators that just can't keep up with the volume of interactions requiring their attention

- The value of engaging in moderating processes is considered insufficient

- Moderating processes are perceived as unfair

- Those doing the moderating cannot relate to the context in question

- Moderating processes are too binary (e.g. expulsion is the only punishment available).

Moderating by design

Let's take a look at some of the things we need to take into consideration, various feedback loops, and our moderating goals.

Considerations

There are a number of top-level design considerations [ref]. These include:

Manual / automatic

Human interactions involve subtlety, context, irony, sarcasm, and multimedia; in fact, many qualities and formats that don't come easily to algorithmic interpretation. Fully automated moderation isn't feasible today (and perhaps we might hope that long remains the case), so that leaves us with entirely manual moderating processes and computer-assisted moderating processes.

Transparent / opaque

“Your account has been disabled.”

This is all you get when Facebook's automated moderation kicks in. No explanation. No transparency. At AKASHA, we default to transparency, obvs.

Deterrence & punishment

Only when people know about a law can it be effective. Only when people learn of a social norm can it endure. Both the law and social norms deter but do not prevent subversion. Punishment is available when the deterrent is insufficient — in fact it validates the deterrent — and both are needed in moderating processes.

Centralized / decentralized

Decentralization is a means rather than an end in itself [ref]. In this instance, decentralized moderating processes contribute to a feeling of community ‘ownership’, personal agency, and ideally more organic scaling.

Extrinsic / intrinsic motivation

Some moderating processes play out in everyday interactions whereas others require dedication of time to the task. That time allocation is either extrinsically motivated (e.g. for payment, per Facebook's moderators), or intrinsically motivated (e.g. for the cause, per the Wikipedia community). It is often said that the two don't make comfortable bedfellows, but at the same time there are many people out there drawn to working for ‘a good cause’ and earning a living from it.

We are drawn to supporting and amplifying intrinsic motivations without making onerous demands on the time of a handful of community members. Moderating processes should feel as normal as not dropping litter and occasionally picking up someone else's discarded Coke can. When they start to feel more like a volunteer litter pick then questions of ‘doing your fair share’ are raised in the context of a potential tragedy of the commons.

Never-ending feedback

Nothing about moderating is ever static. We can consider five levels of feedback:

1st loop

Demonstrating and observing behaviors on a day-to-day basis is a primary source and sustainer of a community's culture — how we do and don't do things around here. We might call it moderating by example.

2nd loop

This is more explicitly about influencing the circulation of content and the form most people think about when contemplating moderation. A typical form of second-loop feedback is exemplified by the content that has accrued sufficient flags to warrant attention by a moderator — someone with authority to wield a wider range of moderating processes and/or greater powers in wielding them. While it sometimes appears to play second fiddle to corrective feedback, 2nd loop also includes positive feedback celebrating contributions and actions the community would love to see more of.
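To make the flag-then-review pattern concrete, here is a minimal sketch in Python. It is an illustration only, not how Ethereum World implements flagging: the `FlagQueue` class, its method names, and the threshold of 3 are all assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical threshold: how many distinct members must flag an item
# before it is escalated. The real value would be a community decision.
FLAG_THRESHOLD = 3


class FlagQueue:
    """Toy model of second-loop feedback: flags accrue until review is warranted."""

    def __init__(self, threshold=FLAG_THRESHOLD):
        self.threshold = threshold
        # content_id -> set of member_ids who flagged it.
        # A set means repeat flags from one member count only once.
        self._flaggers = defaultdict(set)

    def flag(self, content_id, member_id):
        """Record a flag and report whether the content now needs review."""
        self._flaggers[content_id].add(member_id)
        return self.needs_review(content_id)

    def needs_review(self, content_id):
        """True once enough distinct members have flagged the content."""
        return len(self._flaggers.get(content_id, ())) >= self.threshold
```

Counting distinct members rather than raw flags is one simple design choice here; it stops a single participant from escalating content alone.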

3rd loop

Community participation is structured by moderating processes. Third-loop feedback may then operate to review and trim or adapt or extend those structures, reviewing members' agency, by regular appointment or by exception.

4th loop

Moderating is a form of governing — the processes of determining authority, decision-making, and accountability. Fourth-loop feedback may then operate such that the outcomes of 1st-, 2nd-, and 3rd-loop feedback prompt a review of community governance, or contribute to periodic reviews.

Legal

When infrastructure is owned and/or operated by a legal entity, that entity has legal responsibilities under relevant jurisdictions that may require the removal of some content. When content-addressable storage is used (e.g. IPFS, Swarm), deletion is tricky but delisting remains quite feasible when discovery entails the maintenance of a search index.
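The difference between deleting and delisting can be sketched with a toy model. Everything below is hypothetical: `ContentStore` stands in for content-addressable storage in general (it is not the IPFS or Swarm API), and `SearchIndex` stands in for whatever discovery layer a host maintains.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is the SHA-256 hash of the bytes."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        content_id = hashlib.sha256(data).hexdigest()
        self._blobs[content_id] = data
        return content_id

    def get(self, content_id: str):
        return self._blobs.get(content_id)


class SearchIndex:
    """Toy discovery layer: maps search terms to content ids."""

    def __init__(self):
        self._index = {}        # term -> set of content ids
        self._delisted = set()  # content ids hidden from discovery

    def add(self, content_id: str, text: str):
        for term in text.lower().split():
            self._index.setdefault(term, set()).add(content_id)

    def delist(self, content_id: str):
        """Hide content from search results without touching the store."""
        self._delisted.add(content_id)

    def search(self, term: str):
        hits = self._index.get(term.lower(), set())
        return sorted(hits - self._delisted)
```

In this sketch, a delisted item can still be fetched by anyone who already holds its content id, but it no longer surfaces through search. That is the sense in which delisting is feasible even when deletion is not.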

Moderating design goals

We've identified eight moderating design goals. It will always be useful in our future discussions together to identify whether any difference of opinion relates to the validity of a goal or to the manner of achieving it.

Goal 1: Freedom

We celebrate freedom of speech and freedom of attention, equally.

Goal 2: Inclusivity

Moderating actions must be available to all. Period.

Goal 3: Robustness

Moderating actions by different members may accrue different weights in different contexts solely to negate manipulation / gaming and help sustain network health. In simple terms, 'old hands' may be more fluent in moderating actions than newbies, and we also want to amplify humans and diminish nefarious bots in this regard.
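As a rough illustration of weighted moderating actions, the sketch below sums feedback values scaled by a per-member weight. The function name, the weights, and the idea of giving suspected bots a weight of zero are assumptions for this example only; how weights would actually be earned or assigned is an open design question.

```python
def aggregate_feedback(actions, weights, default_weight=1.0):
    """Sum feedback values, each scaled by the acting member's weight.

    actions: list of (member_id, value) pairs, where value is +1 for
             positive feedback and -1 for negative feedback.
    weights: dict mapping member_id to a hypothetical weight.
    Members without an entry in `weights` get `default_weight`.
    """
    return sum(value * weights.get(member_id, default_weight)
               for member_id, value in actions)


# Hypothetical weights: an 'old hand' counts double, suspected bots count for nothing.
example_weights = {"old_hand": 2.0, "bot_a": 0.0, "bot_b": 0.0}

example_actions = [
    ("old_hand", -1),  # experienced member gives negative feedback
    ("newbie", +1),    # no entry in example_weights, so default weight applies
    ("bot_a", +1),     # suspected bots try to boost the content
    ("bot_b", +1),
]

score = aggregate_feedback(example_actions, example_weights)
```

Unweighted, the four actions above would net out at +2; with these assumed weights the bots' boosts are ignored and the aggregate is negative.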

Goal 4: Simplicity

Moderating processes should be simple, non-universal (excepting actions required for legal compliance), and distributed.

Goal 5: Complexity

The members and moderating processes involved should produce requisite complexity.

Goal 6: Levelling up

We want to encourage productive levelling up and work against toxic levelling down, for network health in the pursuit of collective intelligence.

Goal 7: Responsibility

Moderating processes should help convey that with rights (e.g. freedom from the crèches of centralized social networks) come responsibilities.

Goal 8: Decentralized

Moderating processes should be straightforward to architect in web 2 initially, and not obviously impossible in the web 3 stack in the longer-term. If we get it right, a visualisation of appropriate network analysis should produce something like the image in the centre here:

This list is by no means exhaustive or final. The conversation about moderation continues, but it needs you! If you think you'd like to be a bigger part of this in the early stages, please get in touch with us. If you feel it is missing something, we also encourage you to join the conversation here and here.

Featured photo credits: Courtney Williams on Unsplash