Vibe Check: Building Transparent Moderation in Web3 Social Media

Social media moderation often feels chaotic, but it doesn't have to be this way. Discover how clear rules, transparency, and user accountability can transform the moderation process and make online spaces fairer for everyone.

Let's be honest: social media moderation is like trying to herd cats. It's a mess, and there's always some drama going on. Whether it's a journalist getting suspended for no apparent reason or a CEO banning users left and right, it can be frustrating and confusing for the average user. But it doesn't have to be that way. With a few simple steps, we can create a better, more transparent moderation process that benefits everyone.

The Current State of Social Media Moderation

As private companies, social media platforms like Twitter and Facebook are free to set their own rules for user conduct. These rules are usually designed to protect shareholder returns or maximize revenue, which often means prioritizing profit over transparency.

However, just because something is legal doesn’t mean it’s good for society. Often, it's unclear why certain accounts are suspended or banned, leading to speculation and accusations instead of constructive discussions.

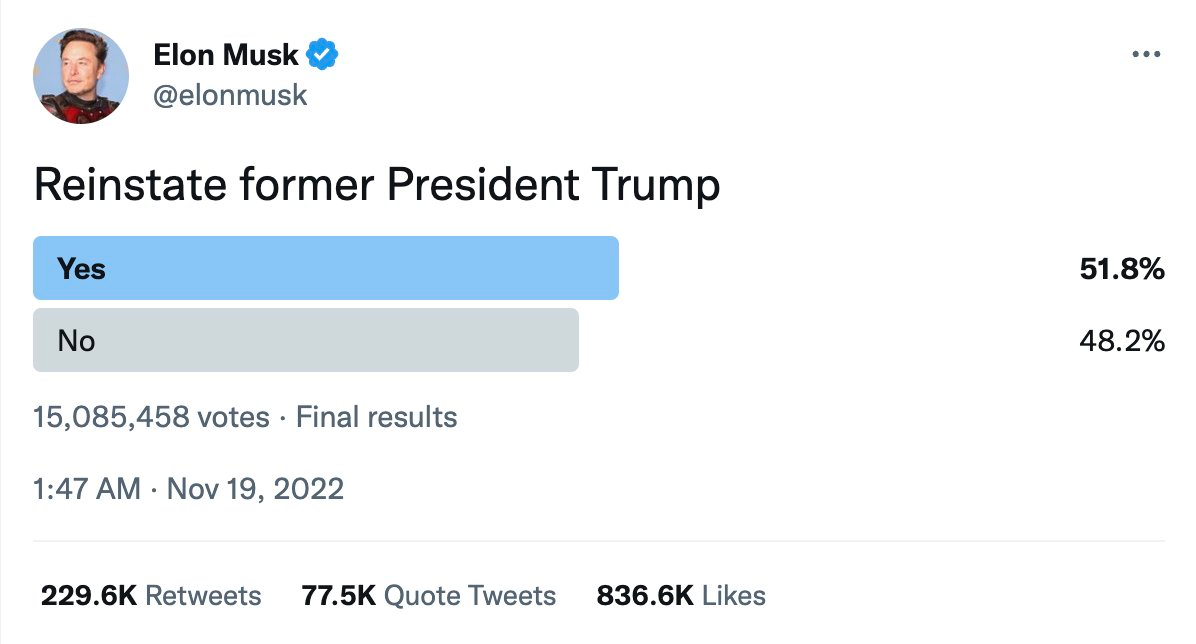

For example, when Elon Musk suspended several journalists on Twitter, the debate focused on whether the suspensions were justified rather than on whether the journalists had actually violated any of Twitter's rules. So how can we do things better?

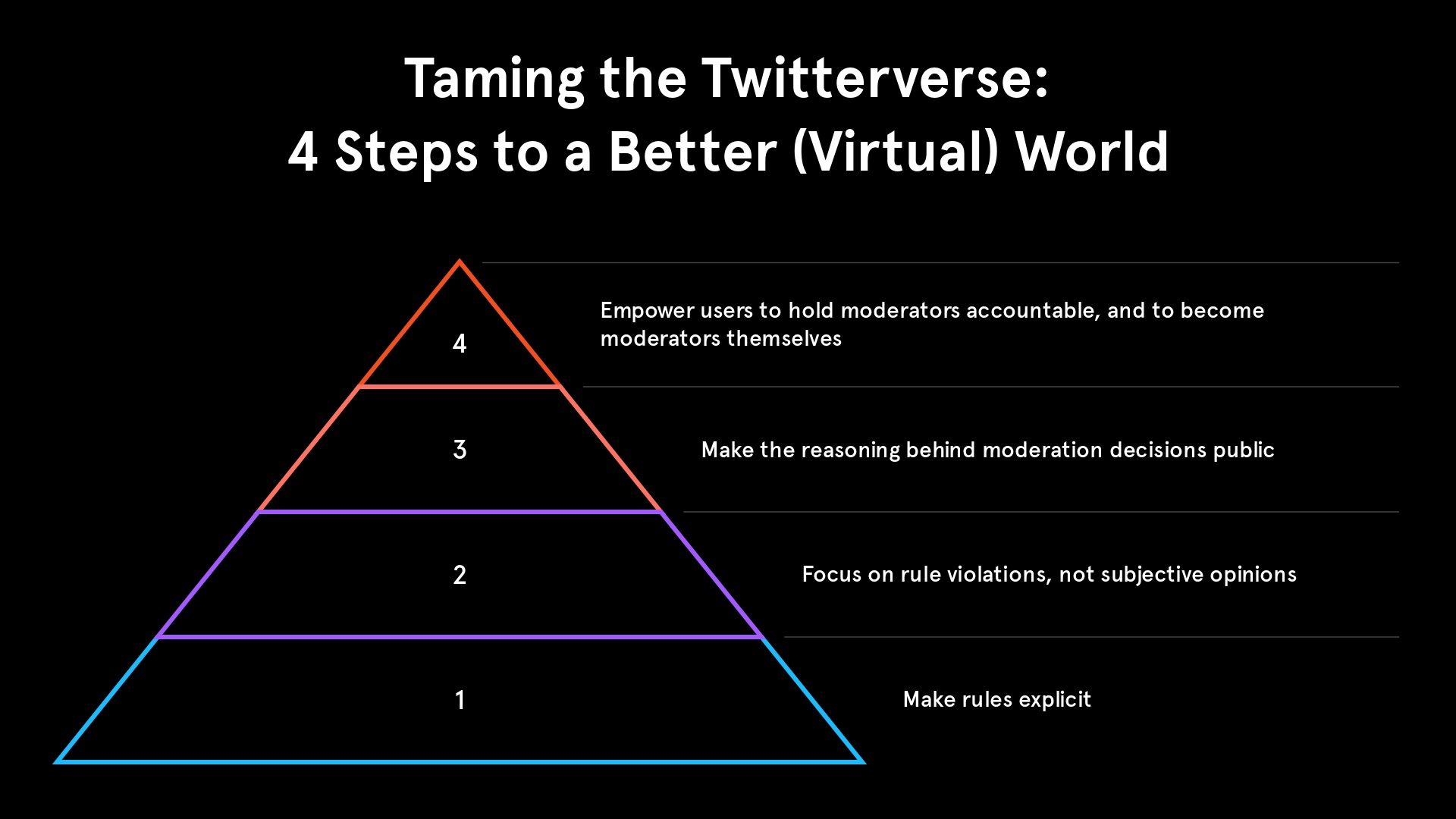

Step 1: Make the rules explicit

This may seem like a no-brainer, but you'd be surprised at how often moderation decisions are based on ambiguous or subjective rules. When platforms clearly outline what behavior is acceptable, users can make more informed decisions about their conduct and hold moderators accountable for enforcing the rules consistently.

Moderation guidelines set the standards for content moderation. Platforms should make these guidelines public, easily accessible, and written in plain language. Instead of complicated legalese, why not explain it in a way a teenager would understand?
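One way to keep rules explicit is to store them as structured data, so the same source can feed the public rules page and every moderation decision. Here is a minimal sketch in Python. The `Rule` fields, the rule IDs, and the example wording are all made up for illustration; a real platform would write its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str      # stable identifier that moderation decisions can cite
    title: str        # short, plain-language name
    description: str  # what is not allowed, in everyday words
    version: int      # bumped whenever the wording changes


# Hypothetical example rules, not taken from any real platform.
RULEBOOK = {
    rule.rule_id: rule
    for rule in [
        Rule("R1", "No harassment",
             "Do not target other people with insults or threats.", 1),
        Rule("R2", "No spam",
             "Do not post the same promotional message over and over.", 2),
    ]
}


def render_rulebook(rulebook):
    """Return the rules as plain text that can be published as-is."""
    lines = []
    for rule in sorted(rulebook.values(), key=lambda r: r.rule_id):
        lines.append(
            f"{rule.rule_id} (v{rule.version}) {rule.title}: {rule.description}"
        )
    return "\n".join(lines)


print(render_rulebook(RULEBOOK))
```

Giving each rule an ID and a version number means a later decision can point at exactly the wording that was in force at the time.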

💡 Tip #1 - Regularly review and update rules: Content moderation rules should evolve with the times. Platforms can involve users in the rule-making process by conducting surveys or open forums to gather input on what works and what needs tweaking.

Step 2: Focus on rule violations, not subjective opinions

It's easy to get caught up in the drama of social media and start asking questions like "Should this person be suspended?" or "Do I agree with this decision?"

But the more productive question to ask is whether the person in question violated any of the rules laid out by the platform.

By focusing on whether or not a rule was broken, we can move the conversation away from subjective opinions and towards a more objective analysis of the situation.

That kind of analysis is impossible when enforcement is invisible. Shadowbanning, where users' posts are hidden without their knowledge, remains a major issue and erodes trust in the platform's fairness.

An incident in April 2023 highlighted this problem. Substack users were in for a surprise when they tried to share links on Twitter, only to find that retweets, replies, and likes had been disabled on tweets containing Substack links. The error message cryptically stated that "some actions on this tweet have been disabled by Twitter", without saying which rules had been broken.

This ambiguity inevitably led to speculation that the restriction was a response to Substack's recent announcement of its Twitter-like Notes feature, given Twitter's history of silencing rivals.

Step 3: Make the reasoning behind moderation decisions public

When users are suspended or banned, platforms should provide clear explanations for their decisions. This not only allows users to understand the reasoning behind the decisions, but it also holds moderators accountable for their actions. Without this transparency, it's difficult for users to make informed decisions about whether or not to continue using the platform.
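To make this concrete, a platform could refuse to record any moderation action that does not cite a published rule and include a public explanation. The sketch below assumes a hypothetical set of rule IDs (`R1`, `R2`) and hypothetical helper names (`record_decision`, `public_notice`); it illustrates the idea and does not describe how any existing platform works.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical rule IDs; in practice these would come from the public rulebook.
KNOWN_RULE_IDS = {"R1", "R2"}


@dataclass(frozen=True)
class ModerationDecision:
    account: str
    action: str       # e.g. "suspend" or "remove_post"
    rule_id: str      # the published rule this decision relies on
    explanation: str  # plain-language reason shown to the user and the public
    decided_at: str   # UTC timestamp in ISO 8601 format


def record_decision(account, action, rule_id, explanation):
    """Create a decision, rejecting it if no rule or explanation is given."""
    if rule_id not in KNOWN_RULE_IDS:
        raise ValueError(f"unknown rule: {rule_id!r}")
    if not explanation.strip():
        raise ValueError("a public explanation is required")
    return ModerationDecision(
        account=account,
        action=action,
        rule_id=rule_id,
        explanation=explanation.strip(),
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def public_notice(decision):
    """Format the decision as a notice anyone can read."""
    return (
        f"{decision.account}: {decision.action} under rule {decision.rule_id}. "
        f"Reason: {decision.explanation}"
    )


decision = record_decision("@example", "suspend", "R1", "Repeated insults in replies")
print(public_notice(decision))
```

Because the check happens when the decision is recorded, a suspension with no stated reason simply cannot enter the system.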

Consider the following example: a journalist gets suspended from Twitter for posting a tweet that seems completely benign to the average user. Without any explanation from Twitter, the public is left to speculate about why the suspension occurred. Was it a simple mistake made by a moderator? Was it a false positive flagged by the platform's algorithm? Was the journalist targeted for their views? Without any information from Twitter, we simply don't know.

This lack of transparency not only undermines the credibility of the platform, but also hinders the ability of users to have informed discussions about the rules and policies of the platform. It's like trying to have a meaningful conversation about the rules of a game when you don't even know what the rules are.

💡 Tip #2 - Publish transparency reports: Transparency is the name of the game when it comes to accountability. Platforms can publish regular transparency reports that provide insights into their content moderation processes. These reports can include statistics on the number of content removals, bans, and appeals, as well as explanations of the reasons behind these decisions.
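If decisions are logged in a structured way, the headline numbers for such a report can be computed directly from the log. The following sketch assumes a hypothetical log format (a list of dictionaries with `action`, `rule_id`, `appealed`, and `overturned` keys) and uses invented sample data.

```python
from collections import Counter

# Invented sample log; the field names are assumptions for this sketch.
DECISIONS = [
    {"action": "remove_post", "rule_id": "R2", "appealed": False, "overturned": False},
    {"action": "suspend", "rule_id": "R1", "appealed": True, "overturned": True},
    {"action": "remove_post", "rule_id": "R2", "appealed": True, "overturned": False},
]


def transparency_report(decisions):
    """Summarize a decision log into the counts a public report might show."""
    appealed = [d for d in decisions if d["appealed"]]
    return {
        "total_decisions": len(decisions),
        "by_action": dict(Counter(d["action"] for d in decisions)),
        "by_rule": dict(Counter(d["rule_id"] for d in decisions)),
        "appeals": len(appealed),
        "appeals_overturned": sum(1 for d in appealed if d["overturned"]),
    }


report = transparency_report(DECISIONS)
for key, value in report.items():
    print(f"{key}: {value}")
```

The share of appeals that get overturned is worth publishing too, since it hints at how often the first decision was wrong.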

Step 4: Empower users to hold moderators accountable, and become moderators themselves

Ultimately, the key to a better moderation process is accountability.

💡 Tip #3 - Allow user feedback and appeals: Giving users the ability to provide feedback and appeal moderation decisions can be a powerful tool in holding moderators accountable. Platforms can set up a "feedback hotline" where users can appeal decisions by presenting their cases for why their content should be reinstated.
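An appeals process is easier to trust when every case moves through the same visible stages. Below is a rough sketch of that idea as a small state machine. The stage names (`decided`, `appealed`, `under_review`, `upheld`, `overturned`) and the `Appeal` class are assumptions made for illustration.

```python
# Which stage an appeal may move to next. Stage names are hypothetical.
ALLOWED_TRANSITIONS = {
    "decided": {"appealed"},
    "appealed": {"under_review"},
    "under_review": {"upheld", "overturned"},
    "upheld": set(),       # final
    "overturned": set(),   # final
}


class Appeal:
    def __init__(self, decision_id, user_statement):
        self.decision_id = decision_id
        self.user_statement = user_statement  # the user's case, in their own words
        self.status = "appealed"
        self.history = ["decided", "appealed"]  # visible trail of every stage

    def move_to(self, new_status):
        """Advance the appeal, refusing any step the process does not allow."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status!r} to {new_status!r}")
        self.status = new_status
        self.history.append(new_status)


appeal = Appeal("D-1", "My post quoted the insult in order to criticize it.")
appeal.move_to("under_review")
appeal.move_to("overturned")
print(" -> ".join(appeal.history))
```

Keeping the full history means a user can always see where their appeal stands and how it got there.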

💡 Tip #4 - Train and educate moderators: Moderators are the frontline warriors in the content moderation battle, and they need to be equipped with the right skills and knowledge. Platforms can provide regular training to moderators on topics such as identifying bias, understanding cultural nuances, and navigating tricky situations.

💡 Tip #5 - Foster community engagement: Building a sense of community ownership can go a long way in promoting accountability in content moderation. Platforms can create opportunities for moderators and users to engage in open and constructive communication. This can include virtual "town hall" meetings where moderators answer community questions.

How to get involved? 🙌🏽

We need a plurality of minds and ideas to work out how decentralized social networking should evolve. If you are interested in contributing your superpowers to a community of pioneers focused on a complex but essential challenge for the decentralized web, the AKASHA Foundation's community is the place to be! Join us and start making a difference today!